NVIDIA Unveils DGX Spark and DGX Station, Redefining AI Computing for 2025

By Joseph Provence, a news contributor who writes about technology, small business, and e-commerce.

Apr 15, 2025, 1:30 PM MST

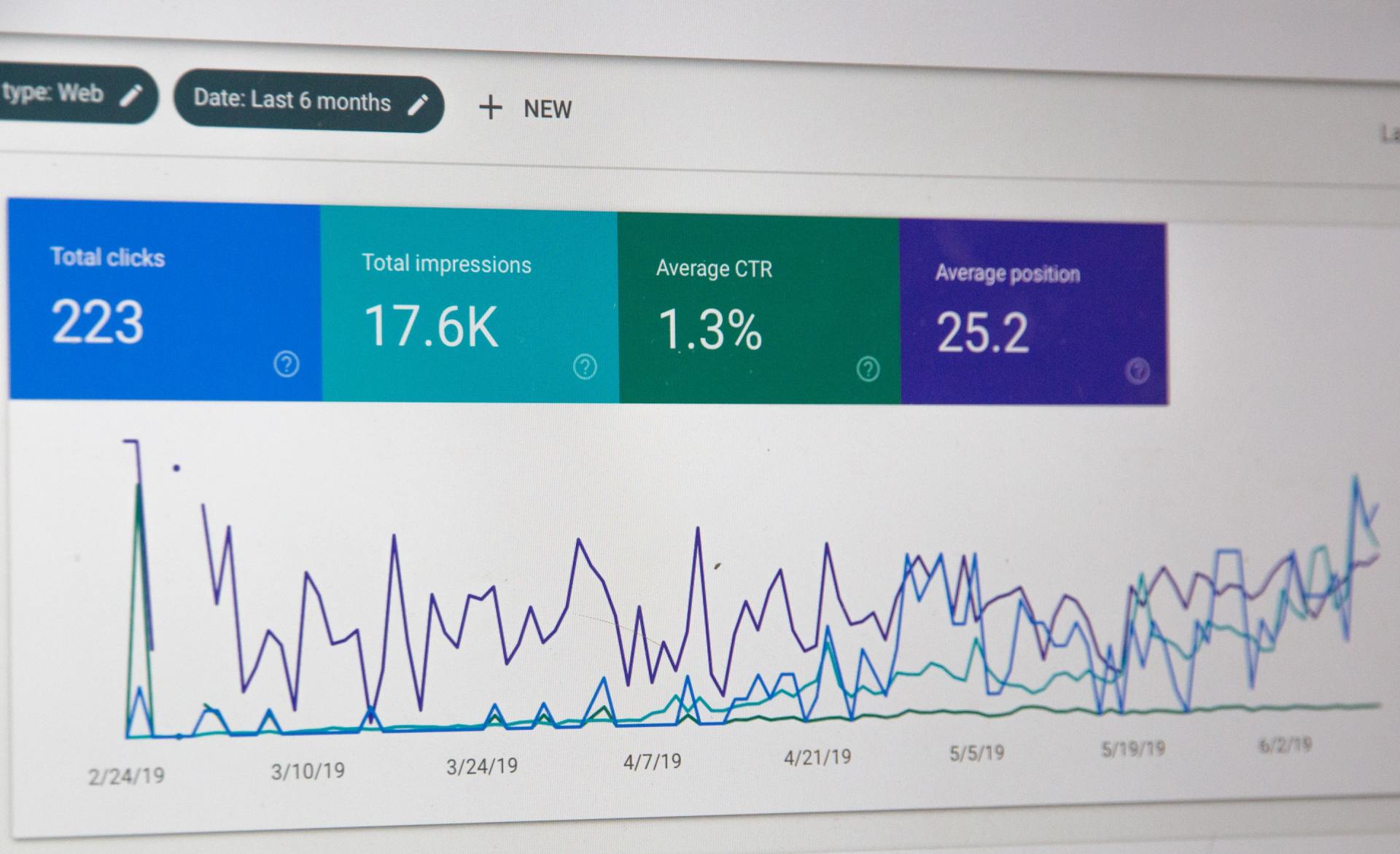

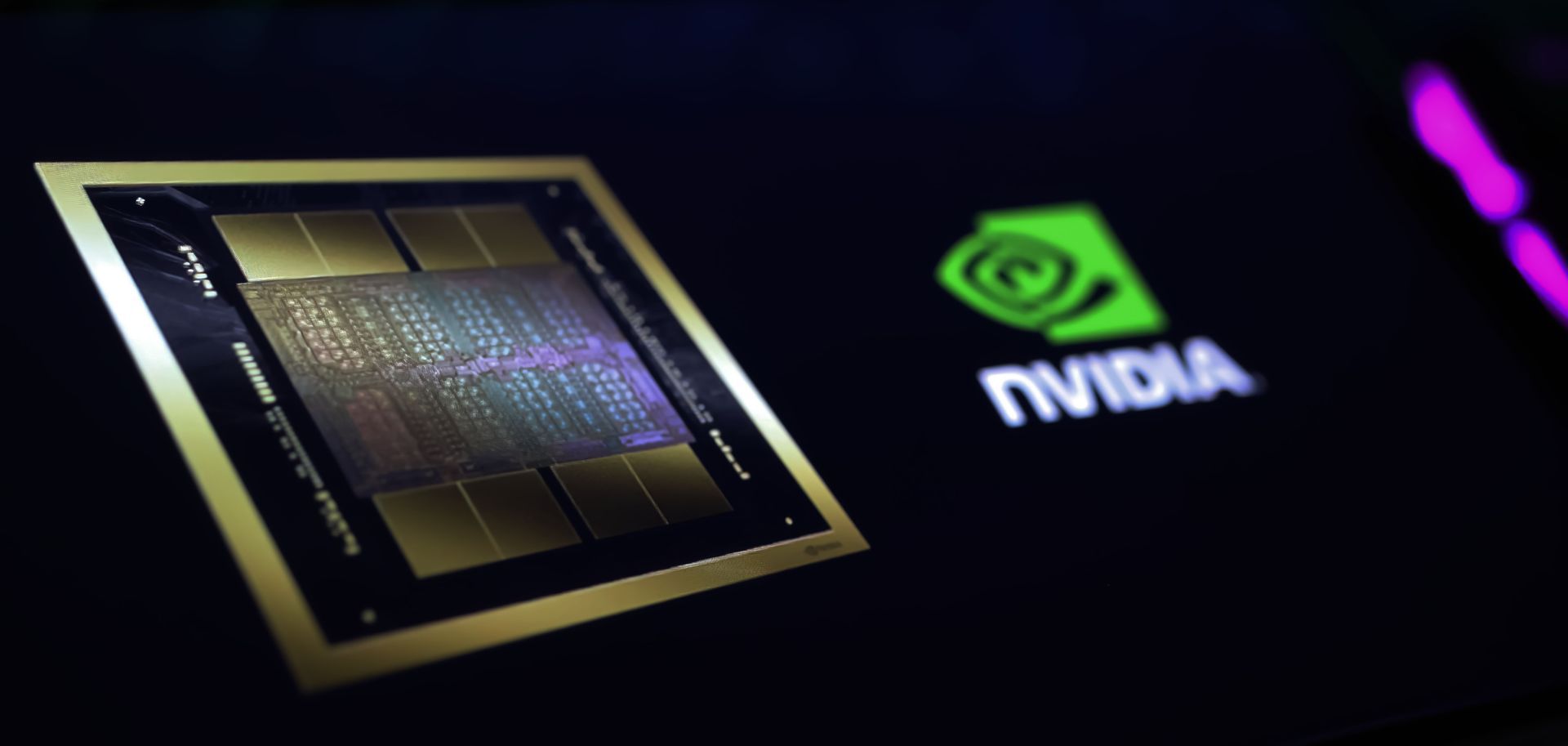

At NVIDIA’s recent GTC 2025 conference, CEO Jensen Huang introduced two groundbreaking additions to the company’s AI hardware lineup: the DGX Spark and the DGX Station. These personal AI supercomputers aim to transform machine learning, data science, and large language model (LLM) development by bringing unprecedented computational power to desktop environments. With specifications tailored for AI professionals, these systems promise to address key challenges in running LLMs locally, though their high costs have sparked debate.

The DGX Spark, previously known as Project DIGITS, is a compact, palm-sized mini PC designed for AI developers, researchers, and students. Powered by the NVIDIA GB10 Grace Blackwell Superchip, it delivers up to 1,000 AI TOPS (trillions of operations per second) at FP4 precision and features 128GB of unified LPDDR5X memory. This unified memory architecture, similar to Apple’s silicon designs, lets the CPU and GPU share the same memory pool, enabling the Spark to handle AI models with up to 200 billion parameters locally. Users can prototype, fine-tune, and deploy models to NVIDIA’s DGX Cloud or other accelerated infrastructure with minimal code changes.
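As a rough check on the 200-billion-parameter figure, a model's weight footprint is its parameter count multiplied by the bytes stored per parameter. The sketch below assumes 0.5 bytes per parameter at FP4 and ignores the KV cache, activations, and runtime overhead, all of which also consume memory in practice; the helper name `weight_footprint_gb` is hypothetical.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0, "fp4": 0.5}

SPARK_MEMORY_GB = 128  # DGX Spark unified LPDDR5X memory, as quoted above


def weight_footprint_gb(num_params: float, precision: str) -> float:
    """Approximate weight-only memory in GB (1 GB = 1e9 bytes).

    Ignores KV cache, activations, and framework overhead.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Check a 200B-parameter model at each precision against the Spark's memory.
for precision in ("fp16", "fp8", "fp4"):
    gb = weight_footprint_gb(200e9, precision)
    fits = "fits" if gb <= SPARK_MEMORY_GB else "does not fit"
    print(f"200B params @ {precision}: {gb:.0f} GB -> {fits} in {SPARK_MEMORY_GB} GB")
```

Under these assumptions only the FP4 case (about 100GB) fits, leaving roughly 28GB of headroom, which suggests why the 200-billion-parameter ceiling is paired with FP4 precision.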

However, the Spark’s memory bandwidth of 273GB/s has drawn criticism. While sufficient for many tasks, it lags behind alternatives such as Apple’s M4 Max (around 400GB/s) and NVIDIA’s own RTX 5090 (about 1,790GB/s). For LLMs, where memory bandwidth and capacity are critical for fast token generation, this could limit performance relative to expectations. Priced at $3,999, with an ASUS-built Ascent GX10 variant at $2,999, the Spark has raised questions about its value, especially given its specialized DGX OS, which may restrict versatility compared to general-purpose systems.
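Why bandwidth matters for token generation can be estimated with a common back-of-the-envelope rule: in single-stream decoding, producing each new token requires reading roughly all of the model's weights from memory, so tokens per second are bounded above by memory bandwidth divided by the weight footprint. The sketch below applies that rule to the Spark and M4 Max figures quoted above for a hypothetical 70-billion-parameter model at FP4 (about 35GB). It is an idealized ceiling that ignores compute limits, KV-cache reads, batching, and speculative decoding, so real throughput will be lower.

```python
def max_decode_tokens_per_sec(bandwidth_gb_s: float, weights_gb: float) -> float:
    """Idealized upper bound: each generated token reads all weights once."""
    return bandwidth_gb_s / weights_gb


# Hypothetical 70B-parameter model at FP4 (0.5 bytes/param) -> 35 GB of weights.
WEIGHTS_GB = 70e9 * 0.5 / 1e9

# Memory bandwidth figures as quoted in the article, in GB/s.
systems = {"DGX Spark": 273, "Apple M4 Max": 400}

for name, bw in systems.items():
    print(f"{name}: <= {max_decode_tokens_per_sec(bw, WEIGHTS_GB):.1f} tokens/s")
```

Under these assumptions the Spark tops out near 8 tokens per second versus about 11 for the M4 Max; the ratio simply tracks their bandwidths, which is the crux of the criticism.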

In contrast, the DGX Station is a powerhouse aimed at enterprise-level AI workloads. Built around the GB300 Grace Blackwell Ultra Desktop Superchip, it boasts 20 petaflops of AI performance and 784GB of unified memory, comprising 288GB of GPU HBM3e memory with an impressive 8TB/s of bandwidth and 496GB of CPU LPDDR5X memory at 396GB/s. This configuration makes it well suited to large-scale training and inference of massive LLMs, bypassing the memory constraints of consumer GPUs like the RTX 5090, which is limited to 32GB of VRAM.
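The Station's 784GB figure is the sum of two memory pools (288GB of HBM3e plus 496GB of LPDDR5X) that run at very different speeds. The sketch below uses only the capacities quoted above and a weight-only footprint rule (parameters times bytes per parameter, ignoring KV cache and activations) to classify where a model's weights would fit: in the RTX 5090's 32GB of VRAM, in the Station's fast HBM3e, across both Station pools, or nowhere. The function name `placement` and the model sizes are hypothetical examples.

```python
# Memory capacities quoted in the article, in GB.
RTX_5090_VRAM_GB = 32
STATION_HBM_GB = 288      # GPU HBM3e, ~8 TB/s
STATION_LPDDR_GB = 496    # CPU LPDDR5X, ~396 GB/s
STATION_TOTAL_GB = STATION_HBM_GB + STATION_LPDDR_GB  # 784 GB


def placement(num_params: float, bytes_per_param: float) -> str:
    """Return the smallest listed memory tier that holds the weights (weights only)."""
    gb = num_params * bytes_per_param / 1e9
    if gb <= RTX_5090_VRAM_GB:
        return "RTX 5090 VRAM"
    if gb <= STATION_HBM_GB:
        return "Station HBM3e"
    if gb <= STATION_TOTAL_GB:
        return "Station HBM3e + LPDDR5X"
    return "does not fit"


# Hypothetical model sizes at FP8 (1 byte per parameter).
for params in (20e9, 70e9, 405e9, 1e12):
    print(f"{params / 1e9:.0f}B params @ FP8 -> {placement(params, 1.0)}")
```

Models in the third tier fit on the Station, but part of their weights would sit in the slower LPDDR5X pool, so capacity alone does not guarantee HBM-class speed.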

For comparison, the previous-generation DGX Station A100 was priced at $149,000 for the 320GB model and $99,000 for the 160GB model.

The Station’s connectivity is equally robust, featuring NVIDIA’s NVLink-C2C interconnect for chip-to-chip communication between the CPU and GPU and a ConnectX-8 SuperNIC offering up to 800Gb/s of networking bandwidth for clustering multiple units. This scalability is crucial for professionals building AI clusters, though the 900GB/s NVLink-C2C link is far slower than the GPU’s 8TB/s HBM3e bandwidth, a potential bottleneck when workloads spill into CPU memory.
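Networking figures are quoted in gigabits per second, while memory and NVLink figures use gigabytes per second, which makes them easy to misread side by side. The sketch below converts every link mentioned for the Station to GB/s (dividing bits by 8) and estimates how long moving a hypothetical 100GB payload would take over each. It ignores protocol overhead and latency, so real transfers would be slower.

```python
def gbit_to_gbyte(gbit_per_s: float) -> float:
    """Convert Gb/s to GB/s (8 bits per byte)."""
    return gbit_per_s / 8


# Link bandwidths quoted in the article, normalized to GB/s.
links_gb_s = {
    "ConnectX-8 SuperNIC (800 Gb/s)": gbit_to_gbyte(800),
    "NVLink (900 GB/s)": 900.0,
    "HBM3e (8 TB/s)": 8000.0,
}

PAYLOAD_GB = 100  # hypothetical amount of data to move

for name, bw in links_gb_s.items():
    seconds = PAYLOAD_GB / bw
    print(f"{name}: {bw:.0f} GB/s -> {seconds:.4f} s for {PAYLOAD_GB} GB")
```

Converted this way, the 800Gb/s network link works out to 100GB/s, roughly a ninth of the 900GB/s NVLink figure and one-eightieth of the HBM3e bandwidth, which is why data crossing the slower links tends to set the pace.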

NVIDIA also teased the RTX Pro Workstation, a mid-tier option that puts professional-grade RTX GPUs in a desktop form factor, though details remain scarce. Huang described these systems as “the computer of the age of AI,” designed to assist the world’s 30 million software engineers with AI-driven development. Availability for the DGX Spark begins this summer, with the Station expected later in 2025 through partners such as ASUS, Dell, and HP.

While the DGX Spark and Station push boundaries, their premium pricing and specialized focus may limit mainstream adoption. For AI professionals, the Station’s unmatched power could redefine local computing, but the Spark’s performance-to-cost ratio invites comparisons to alternatives like Apple’s Mac Studio. As NVIDIA continues to shape the AI landscape, these machines signal a bold step toward democratizing high-performance computing, albeit at a steep price.